What Is Verifier?

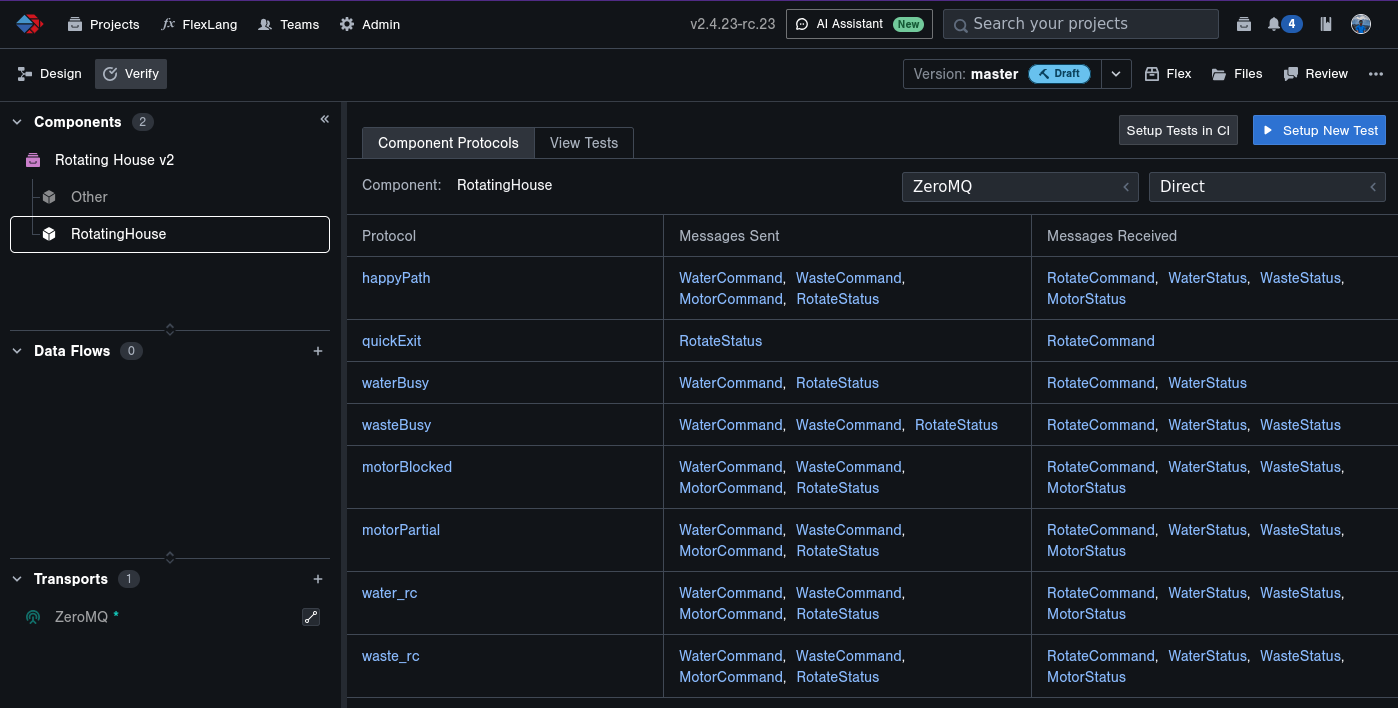

The Verify mode of a project allows you to test your software component at runtime using Tangram Pro™ Verifier, checking its behavior against its Flex protocol model so that you can be more confident that your software is doing what it's supposed to do.

- As a software engineer, Verifier allows you to check that your software adheres to a model as you develop and modify the code.

- As a model-based systems engineer, Verifier can let you validate third-party code that's been delivered to you instead of needing to trust it blindly.

- As a system integrator, Verifier provides you with a method to test each component in isolation before going through the time and effort of integrating an entire system.

Learn More

If you'd like to learn more about why Verifier operates the way it does, read on. Otherwise, you may find these other pages useful:

- Tutorials: Guided tutorials

- How-To Guides: Step-by-step instructions for common tasks

- Reference: Configuration, constraints, and other topics

How It Works

Tangram Pro™ Verifier tests a single software component based on its "interface": the messages it sends and receives, the order of those messages, and their contents.

Tangram Pro™ runs your component software in isolation to test it, alongside an environment that acts as the inverse of the model to emulate the rest of the system: when the component expects to receive a message, Tangram Pro™ sends it, and vice versa. This information is described in protocols, which contain the relevant details about the messages, their ordering, and any requirements for their contents. Tangram Pro™ can run "validity" checks to test that a component handles messages correctly, as well as "invalidity" checks to see what the component does when it receives bad messages. When testing finishes, Tangram Pro™ generates a report containing the results.
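The "inverse of the model" idea can be pictured with a short Python sketch. It is not Verifier's implementation or Flex syntax: the `Step` type, the `invert` function, and the message names `StartCommand` and `StatusReport` are hypothetical, chosen only to show that every message the component expects to receive becomes one the environment sends, and vice versa, with the order unchanged.

```python
from dataclasses import dataclass
from typing import List

# Directions are written from the component's point of view (hypothetical encoding).
SEND = "send"  # the component sends this message
RECV = "recv"  # the component expects to receive this message


@dataclass(frozen=True)
class Step:
    direction: str
    message: str


def invert(protocol: List[Step]) -> List[Step]:
    """Return the environment's view of a component protocol.

    Every message the component sends becomes one the environment
    receives, and vice versa; the order of the steps is unchanged.
    """
    flipped = {SEND: RECV, RECV: SEND}
    return [Step(flipped[s.direction], s.message) for s in protocol]


# Hypothetical component protocol: wait for a command, then report status.
component_protocol = [
    Step(RECV, "StartCommand"),
    Step(SEND, "StatusReport"),
]

for step in invert(component_protocol):
    print(step.direction, step.message)
# send StartCommand
# recv StatusReport
```

Because inversion only flips directions, applying it twice returns the original protocol, which is a handy sanity check when reasoning about which side sends what.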

Comparison to Other Testing Methods

Tangram Pro™ Verifier falls into the class of testing tools that check that your software does what it's supposed to do. Testing tools in this class fall roughly into one of three categories:

- Unit Testing

- Property-based Testing

- Integration Testing

We'll compare Verifier to those other tools with an analogy:

Imagine you're designing a new calculator:

- Unit Testing: You want to make sure the calculator can handle addition correctly, so you write a test that ensures your calculator software correctly shows that `2 + 2` equals `4`.

- Property-based Testing: It would be impossible to test every combination of numbers by hand, so you use another tool to write a test that shows your code correctly calculates that for any `x` and `y`, `x + y` always equals the correct value.

- Integration Testing: While unit tests and property-based tests show that your code does the right thing in a strict, lab-like environment, you would still never send the calculator to production until you had tested the physical calculator by pushing the buttons `2`, `+`, `2`, and `=` and seen the correct result in the display.

That last type of test is integration testing: it gets you as close as possible to testing your product in the real world before you ship it to other users. Verifier falls into this last category, letting you run your component and test it while it runs.
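The first two categories in the calculator analogy can be sketched in a few lines of Python. The `add` function is a hypothetical stand-in for the calculator's addition logic. Dedicated libraries such as Hypothesis generate inputs far more cleverly; this sketch uses only the standard library's `random` module to show the shape of a property-based test.

```python
import random


def add(x: int, y: int) -> int:
    """Hypothetical calculator function under test."""
    return x + y


def test_unit() -> None:
    # Unit test: one hand-picked input with one known answer.
    assert add(2, 2) == 4


def test_property(trials: int = 1000, seed: int = 0) -> None:
    # Property-based test: many generated inputs, each checked against
    # properties that must hold for any x and y.
    rng = random.Random(seed)
    for _ in range(trials):
        x = rng.randint(-10**6, 10**6)
        y = rng.randint(-10**6, 10**6)
        assert add(x, y) == add(y, x)   # addition is commutative
        assert add(x, 0) == x           # zero is the identity
        assert add(add(x, y), -y) == x  # adding then removing y returns x


test_unit()
test_property()
print("all tests passed")
```

Neither test presses any buttons: both exercise the code directly, which is exactly the gap integration testing fills.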

Verifier is not a replacement for unit testing or property-based testing: it's a supplement. It's a powerful supplement that can find issues that the other types of testing miss, and because Verifier doesn't need to read your code, it's easier and quicker to use. However, the other types of testing absolutely still have their place!

Other Integration Testing Tools

There are other integration testing tools, usually developed by companies to cover a very specific software system and a specific message set. These tools are not reusable unless extra development time is spent retrofitting them to each new message set individually, and because of the work required to create them from scratch, they tend to lack features:

- They can only send and receive predefined specific messages (often stored in XML or binary files)

- They only use a single transport

- They lack configuration

By comparison, Verifier can:

- Send and receive any message that's defined in Flex and has an appropriate serializer

- Use constraints specified in Flex protocols to make message contents dynamic and responsive, similar to the property-based testing shown above, rather than always using the exact same messages

- Send and receive messages over any of the supported transports

- Be configured to test any containerized component supporting the above points

You can find more discussion on what sets Verifier apart below.

Verifier In Depth

Why Containerized Components?

Containers (specifically, containers that follow the Open Container Initiative (OCI) format, such as those built and run with Docker or Podman) provide a common interface for packaging and running software. This format allows you as a user to wrap your component and any of its necessary dependencies into a single image that you provide to Verifier for testing.

Containers are already a standard in the software industry, and are very likely to be the way your component is deployed already.

Why Test Interfaces?

At the risk of sounding repetitive, we'll mention again the goal of Verifier: given a known model for how a component should operate, Verifier checks that the software operates correctly based on the messages the component sends and receives.

This is very useful when testing software that has been defined in a Model-Based Systems Engineering tool such as Cameo or Tangram Pro Designer. Without Tangram Pro Verifier or a custom-built integration testing tool, engineers have to manually audit the code against the model. Verifier provides an adaptable and automated alternative, vastly speeding up the process of testing software. If you're interested in integrating third-party software, testing your own code as you develop it and make changes, or swapping multiple components that are supposed to be interoperable, testing the interface of a component is a highly valuable way to handle verification.

Why a New Tool?

When we were considering whether or not to create Verifier, we started with the idea of "fuzzing". You may already be familiar with tools like libFuzzer or AFL++. We quickly discovered that fuzzers take far too long to get to the meat and potatoes when testing a component's interface due to the many details involved in messages, such as serialization or checksums. The amount of time required for a fuzzer to figure out the correct serialization structure and get every part of the serialized message's byte format correct made it infeasible to apply to arbitrary components in a short time frame.

So we wrote our own tool: one that builds on our Flex language and Tangram Pro to quickly build integration test suites for components using any set of messages that can be expressed in Flex, using a known set of serializers. This approach makes the tool highly reusable, which has let us add more configuration and capability through configurable protocols.

How Does Verifier Test Components?

Verifier receives messages from the component under test, and can also send messages to that component. In this way, Verifier can emulate the system that the component will be integrated into from an interface point of view: it doesn't recreate and mimic the entire system, but instead provides the counterpart to the component's message model, allowing the component to interact with an outside party just as it normally would.

To the user, a protocol is simply an ordered list of messages. To Verifier, it's a sequence of events that begins when the component starts. This sequence of events will track the component's lifecycle (from start to stop), messages, and other extraneous events (like timeouts when no message is received). Message protocols created by the user are transformed into these event sequences when Verifier starts, and then the real-time events captured by Verifier when your component runs must match the expected events.
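The transformation from a message list to an event sequence can be sketched in Python. This is an illustration under stated assumptions, not Verifier's internals: the `(kind, detail)` event tuples, the `to_events` and `first_mismatch` functions, and the message names are hypothetical. Lifecycle events bracket the messages, and a `timeout` event stands in for a message that never arrived.

```python
from typing import List, Optional, Tuple

# Hypothetical event encoding: (kind, detail).
Event = Tuple[str, str]


def to_events(protocol: List[Tuple[str, str]]) -> List[Event]:
    """Expand an ordered message list into an expected event sequence.

    The sequence is bracketed by component lifecycle events, so a
    component that never starts, or stops early, is also detected.
    """
    events: List[Event] = [("lifecycle", "start")]
    for direction, message in protocol:
        events.append((direction, message))
    events.append(("lifecycle", "stop"))
    return events


def first_mismatch(expected: List[Event], observed: List[Event]) -> Optional[int]:
    """Return the index of the first deviating event, or None if they match."""
    for i, (want, got) in enumerate(zip(expected, observed)):
        if want != got:
            return i
    if len(expected) != len(observed):
        # One sequence is a prefix of the other: the deviation is where it ends.
        return min(len(expected), len(observed))
    return None


# Directions are from the component's point of view.
protocol = [("recv", "StartCommand"), ("send", "StatusReport")]
expected = to_events(protocol)

# The component started and got its command, but never sent its report.
observed = [("lifecycle", "start"), ("recv", "StartCommand"), ("timeout", "StatusReport")]
print(first_mismatch(expected, observed))  # 2
```

Treating lifecycle and timeouts as ordinary events means a single comparison covers both "wrong message" and "no message" failures.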

Verifier iterates through the defined protocols, attempting to validate them one at a time. However, Verifier keeps track of the component's behavior even if it deviates from the expected protocol events, as long as the component follows a different valid protocol. While it may not be the protocol Verifier intended to test, it is still valid behavior! Depending on your configuration, Verifier may re-attempt a test that couldn't be validated on the first try, but when the protocol runs out of attempts it will fail validation: if your component consistently behaves unexpectedly, it's most likely a bug!

For example, consider two overlapping protocols with sequences of messages that look like this:

Protocol 1

Protocol 2

The two protocols overlap at the beginning and deviate only on the last message, when the component sends one of two possible messages, perhaps based on some predictable criteria, or perhaps at random. If no constraints are defined that allow Verifier to determine whether message A or message X is the only valid message, Verifier will accept either and continue with the protocol that is still valid given the received messages.

If Verifier is trying to test Protocol 1 but receives message X, it will throw out Protocol 1 and continue onwards by sending message Y. When Protocol 2 completes, Verifier will mark it as validated. If the user has specified more than one retry in the configuration, Verifier will attempt to validate Protocol 1 again until it has been validated (a valid message C is received instead of X) or Verifier runs out of retries for the sequence.
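The pruning-and-retry behavior can be simulated with a small Python sketch. It is a simplified model under explicit assumptions, not how Verifier is implemented: the shared `Request` step, the `run_once` and `validate` functions, and the scripted component are hypothetical, while the messages C, X, and Y follow the retry discussion above. Each run keeps every protocol still consistent with what has been observed, and the retry loop records any valid protocol completed along the way.

```python
from typing import Dict, List, Optional, Set, Tuple

Step = Tuple[str, str]  # (direction from the component's view, message name)

# Hypothetical protocols: a shared "Request" step, then a branch on the
# message the component sends.
PROTOCOLS: Dict[str, List[Step]] = {
    "Protocol 1": [("recv", "Request"), ("send", "C")],
    "Protocol 2": [("recv", "Request"), ("send", "X"), ("recv", "Y")],
}


def run_once(component_sends: List[str]) -> Optional[str]:
    """Simulate one run; return the protocol it validated, or None.

    `component_sends` stands in for the real component: the messages it
    sends, in order, whenever the surviving protocols expect it to send.
    """
    outgoing = iter(component_sends)
    candidates = dict(PROTOCOLS)
    position = 0
    while candidates:
        # A candidate whose every step has matched is validated.
        for name, steps in candidates.items():
            if position == len(steps):
                return name
        # Simplification: surviving candidates are assumed to agree on the
        # direction of the next step, which holds for these protocols.
        direction, expected = next(iter(candidates.values()))[position]
        if direction == "recv":
            message = expected  # Verifier sends what the component expects
        else:
            message = next(outgoing, None)  # None models a timeout
        # Throw out every candidate that the observed step contradicts.
        candidates = {
            name: steps
            for name, steps in candidates.items()
            if steps[position] == (direction, message)
        }
        position += 1
    return None


def validate(target: str, runs: List[List[str]], max_tries: int) -> Tuple[bool, Set[str]]:
    """Retry `target` up to `max_tries` times.

    Returns whether `target` was validated, plus every protocol validated
    along the way, including valid protocols that were not the target.
    """
    validated: Set[str] = set()
    for component_sends in runs[:max_tries]:
        result = run_once(component_sends)
        if result is not None:
            validated.add(result)
        if result == target:
            return True, validated
    return False, validated


# The component happens to send X on the first run and C on the second.
ok, seen = validate("Protocol 1", [["X"], ["C"]], max_tries=2)
print(ok, sorted(seen))  # True ['Protocol 1', 'Protocol 2']
```

With `max_tries=1`, the same scripted component would leave Protocol 1 unvalidated while still crediting Protocol 2, mirroring the "valid but not intended" case described above.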

Message Validation

When Verifier receives a message sent by the component, it must match that message against one of the expected messages as defined in the Flex protocol. The message must meet several criteria to be considered valid:

- Is the message the correct type of message for any currently valid sequences?

- Can the message be deserialized?

- Do the contents of the message pass validation based on protocol constraints?

If any of these is not true, the message fails validation and any protocol requiring this message to be valid will be marked as failed.
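The three checks can be sketched as a single Python function that reports the first reason a message fails. The assumptions are stated loudly: JSON stands in for a real Flex serializer, and the `StatusReport` message, its `id` and `battery` fields, the `CONSTRAINTS` table, and `validate_message` are all hypothetical rather than part of Verifier or Flex.

```python
import json
from typing import Callable, Dict, List, Optional, Set, Tuple

# Hypothetical constraints: for each message type, (description, predicate)
# pairs evaluated over the decoded fields.
Constraint = Callable[[dict], bool]
CONSTRAINTS: Dict[str, List[Tuple[str, Constraint]]] = {
    "StatusReport": [
        ("battery is a percentage", lambda m: 0 <= m["battery"] <= 100),
        ("id is positive", lambda m: m["id"] > 0),
    ],
}


def validate_message(msg_type: str, payload: bytes, expected_types: Set[str]) -> Optional[str]:
    """Return None if the message is valid, else the reason it failed."""
    # 1. Is this a message type that some still-valid sequence expects?
    if msg_type not in expected_types:
        return f"unexpected message type {msg_type!r}"
    # 2. Can the payload be deserialized? JSON stands in for a real serializer.
    try:
        fields = json.loads(payload)
    except (json.JSONDecodeError, UnicodeDecodeError):
        return "could not deserialize payload"
    # 3. Do the decoded contents satisfy every constraint for this type?
    for description, check in CONSTRAINTS.get(msg_type, []):
        try:
            ok = check(fields)
        except (KeyError, TypeError):
            ok = False  # a missing or mistyped field also fails the constraint
        if not ok:
            return f"constraint failed: {description}"
    return None


expected = {"StatusReport"}
print(validate_message("StatusReport", b'{"id": 7, "battery": 88}', expected))   # None
print(validate_message("StatusReport", b'{"id": 7, "battery": 130}', expected))  # constraint failed: battery is a percentage
print(validate_message("Heartbeat", b"{}", expected))                            # unexpected message type 'Heartbeat'
```

Returning a reason string rather than a bare boolean mirrors the kind of detail a test report needs when explaining why a protocol failed.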

Message Generation

Verifier also uses protocol constraints (e.g. `assuming` or `where` expressions on `recv` and `send` statements) for the messages it sends to the component. Adding constraints to messages allows Verifier to send valid data to components, which should then send valid data back as a response. This prevents sending bad data to your component (if you so desire), speeding up the test process and letting you dictate the data your component should properly respond to.

All of the same constraints are available for both output message validation and input message generation.
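One simple way to turn constraints into generated messages is rejection sampling: draw random field values within their ranges and keep only drafts that satisfy every constraint. This Python sketch uses that swapped-in technique for illustration; Verifier's actual generation strategy is not described here, and the `min_speed`/`max_speed` fields, `FIELD_RANGES`, `WHERE`, and `generate` are hypothetical.

```python
import random
from typing import Callable, Dict, List, Tuple

# Hypothetical field ranges and a cross-field constraint, standing in
# for constraints written in a Flex protocol.
FIELD_RANGES: Dict[str, Tuple[int, int]] = {"min_speed": (0, 100), "max_speed": (0, 100)}
WHERE: List[Callable[[dict], bool]] = [lambda m: m["min_speed"] <= m["max_speed"]]


def generate(rng: random.Random, max_tries: int = 1000) -> dict:
    """Draw random field values until every constraint holds (rejection sampling)."""
    for _ in range(max_tries):
        candidate = {name: rng.randint(lo, hi) for name, (lo, hi) in FIELD_RANGES.items()}
        if all(check(candidate) for check in WHERE):
            return candidate
    raise RuntimeError("no message satisfying the constraints was found")


rng = random.Random(1)
for _ in range(3):
    print(generate(rng))
```

Because `WHERE` is just a list of predicates, the same list could also check messages coming back from a component, so one constraint definition serves both generation and validation. Rejection sampling is easy to reason about but can waste many draws when constraints are tight; a real generator would likely solve the constraints more directly.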

How Do We Test Verifier?

Every Verifier release (and in fact, every Verifier commit) runs through a GitLab pipeline that checks code changes with a series of automated tests:

- Unit tests: Like we said earlier, these are still useful and have their place! We use them to test many aspects of Verifier, including:

- Configuration & message model parsing

- Constraint resolution

- Message validation

- Message generation

- Sequence validation

- Integration tests: Of course we're going to have integration tests for Verifier! We have a custom test harness that wraps Verifier and a diverse range of components to run Verifier through many different scenarios and check the results for regressions:

- Tutorials and scenarios from internal events

- Integration with Tangram Pro and GitLab

- Other scenarios which test both success and failure cases

- Manual regression tests: Verifier, along with all of Tangram Pro, is tested before every release by our QA team